FINS: Understanding Finger Input in Near-Surface Space

Abstract

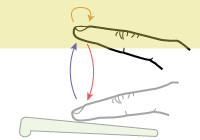

Using the space above desktop input devices adds a rich new input channel to desktop interaction. Input in this elevated layer has previously been used to modify the granularity of a 2D slider, navigate layers of a 3D body scan above a multitouch table, and access vertically stacked menus. However, designing these interactions is challenging because the lack of haptic and direct visual feedback easily leads to input errors. For bare finger input, the user’s fingers need to reliably enter and stay inside the interactive layer, and engagement techniques such as midair clicking have to be disambiguated from leaving the layer. These issues have been addressed for interactions in which users operate other devices in midair, but there is little guidance for the design of bare finger input in this space.

In this paper, we present the results of two user studies that inform the design of finger input above desktop devices. Our studies show that 2 cm is the minimum thickness of the above-surface volume that users can reliably remain within. We found that when accessing midair layers, users do not automatically move to the same height. To address this, we introduce a technique that dynamically determines the height at which the layer is placed, depending on the velocity profile of the user’s initial finger movement into midair. Finally, we propose a technique that reliably distinguishes clicking from homing movements, based on the user’s hand shape. We structure the presentation of our findings using Buxton’s three-state input model, adding states and transitions for above-surface interactions.
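As a rough illustration of the layer geometry described above, the following minimal sketch classifies a fingertip height relative to a midair layer. Only the 2 cm thickness comes from the study result; the function name `classify_height`, the zone labels, and the `layer_bottom_cm` parameter are hypothetical and chosen for illustration. The sketch takes the layer's placement as a given input and does not attempt to reproduce the velocity-based placement technique or the hand-shape-based click detection.

```python
# Minimum layer thickness users could reliably remain within (from the studies).
LAYER_THICKNESS_CM = 2.0


def classify_height(height_cm: float, layer_bottom_cm: float,
                    thickness_cm: float = LAYER_THICKNESS_CM) -> str:
    """Return a hypothetical zone label for a fingertip height above the surface.

    height_cm:       fingertip height above the desktop surface (0 = touching).
    layer_bottom_cm: assumed lower bound of the interactive layer; in practice
                     this would be determined per user, not hard-coded.
    """
    if height_cm <= 0.0:
        return "on_surface"
    if height_cm < layer_bottom_cm:
        return "below_layer"
    if height_cm <= layer_bottom_cm + thickness_cm:
        return "in_layer"
    return "above_layer"
```

For example, with the layer assumed to start 3 cm above the surface, a fingertip at 4 cm falls inside the layer, while one at 5.5 cm has left it through the top.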