Multitouch Workshop

Hardware

Hardware-wise, one cool technology developed by Ulrich von Zadow's group at c-base in Berlin detects not only direct touches, but also how close an object is to the surface. This makes it possible to measure the orientation of a finger reliably.

It basically works by beaming IR light not into the acrylic surface itself, but towards its back side. The projection surface acts as a diffuser: the further an object is from the surface, the more diffuse it appears in the output image. The different distance planes can be reliably isolated with specifically calibrated band-pass filters.
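To make the band-pass idea concrete, here is a minimal sketch in Python. It is not the c-base implementation: it assumes a one-dimensional intensity profile and approximates a band-pass filter as the difference of two box blurs. The names `box_blur`, `band_pass` and `peak` and the sample data are made up for illustration.

```python
def box_blur(signal, radius):
    """Average each sample with its neighbours within `radius` (edges clamped)."""
    out = []
    for i in range(len(signal)):
        lo = max(0, i - radius)
        hi = min(len(signal), i + radius + 1)
        window = signal[lo:hi]
        out.append(sum(window) / len(window))
    return out


def band_pass(signal, small_radius, large_radius):
    """Difference of two box blurs: keeps detail between the two scales."""
    fine = box_blur(signal, small_radius)
    coarse = box_blur(signal, large_radius)
    return [f - c for f, c in zip(fine, coarse)]


def peak(signal):
    """Largest absolute response in a filtered signal."""
    return max(abs(v) for v in signal)


# Hypothetical profiles: a finger touching the surface gives a narrow,
# bright spike; a hovering finger gives a wide, dim bump.
touching = [0.0] * 21
touching[10] = 1.0
hovering = [0.2 if 5 <= i <= 15 else 0.0 for i in range(21)]

# A band tuned to fine detail responds strongly to the touch only.
fine_touch = peak(band_pass(touching, 0, 2))
fine_hover = peak(band_pass(hovering, 0, 2))
```

With these made-up profiles the fine band responds far more strongly to the touching finger than to the hovering one. A real setup would run two-dimensional filters on camera frames and calibrate one band per distance plane.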

Software

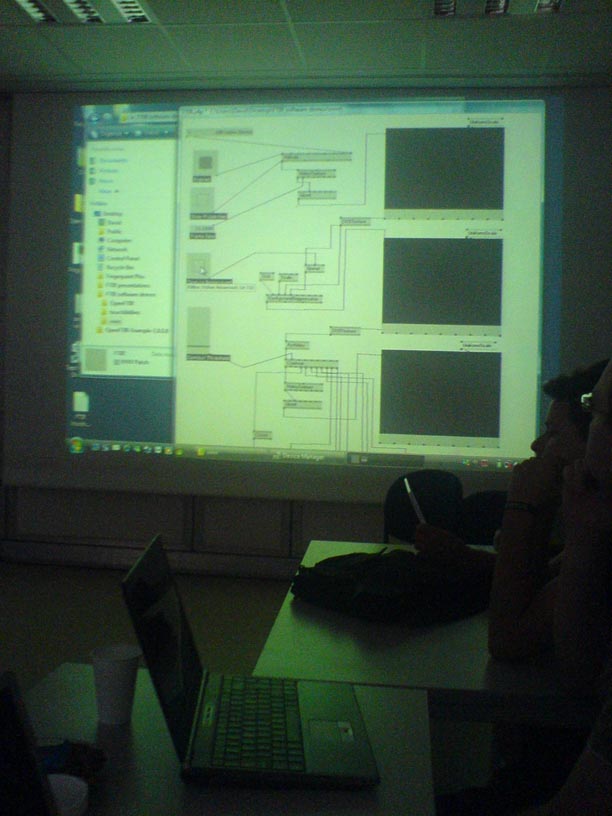

A nice software toolkit for rapid development of prototypes is vvvv. It uses a visual programming paradigm similar to Quartz Composer and Max/MSP and makes it easy to quickly connect blob input data to various visualization methods. Unfortunately, it's Windows-only, but maybe plugins could be implemented for Quartz Composer to allow something similar on OS X.

OpenFTIR is a framework by David Smith's group that's under development right now. It's closely tied to Windows APIs, which allows it to perform quite well:

A 640x480 frame can be processed in about 1.5 ms.

It also computes a motion vector of the touch blobs by simply subtracting the previous frame from the newest frame: the white portion of each blob shows the forward direction, the black portion the backward direction of the movement.
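The frame-differencing trick can be sketched in a few lines of Python. This is not OpenFTIR code: the function names are invented, frames are assumed to be plain lists of brightness rows, and the direction is estimated as the offset from the centroid of the negative ("black") pixels to the centroid of the positive ("white") pixels.

```python
def frame_difference(previous, current):
    """Subtract the previous frame from the newest frame, pixel by pixel."""
    return [[c - p for p, c in zip(prev_row, cur_row)]
            for prev_row, cur_row in zip(previous, current)]


def centroid(diff, keep):
    """Weighted (x, y) centroid of the pixels for which keep(value) is true."""
    total = sum_x = sum_y = 0.0
    for y, row in enumerate(diff):
        for x, value in enumerate(row):
            if keep(value):
                weight = abs(value)
                total += weight
                sum_x += weight * x
                sum_y += weight * y
    if total == 0:
        return None
    return (sum_x / total, sum_y / total)


def motion_direction(previous, current):
    """Vector pointing from the trailing (black) to the leading (white) part."""
    diff = frame_difference(previous, current)
    front = centroid(diff, lambda v: v > 0)  # newly covered pixels
    back = centroid(diff, lambda v: v < 0)   # pixels the blob has left
    if front is None or back is None:
        return (0.0, 0.0)
    return (front[0] - back[0], front[1] - back[1])


# Hypothetical 6x3 frames: a two-pixel blob moves one pixel to the right.
previous = [[0, 0, 0, 0, 0, 0],
            [0, 1, 1, 0, 0, 0],
            [0, 0, 0, 0, 0, 0]]
current = [[0, 0, 0, 0, 0, 0],
           [0, 0, 1, 1, 0, 0],
           [0, 0, 0, 0, 0, 0]]
dx, dy = motion_direction(previous, current)
```

Here `dx` is positive and `dy` is zero, so the blob is moving to the right. The vector indicates direction only; its length is not the distance travelled.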

Existing applications

- Photo App

- Painting App

- Musical App (place symbols on a bar)

Application ideas

Virtual keyboard: might benefit from multitouch if the technology can tell the difference between a finger resting on the surface and a finger pushing it. Then anticipatory movement becomes possible.

Keep in mind the different usage patterns of the hands; the dominant and non-dominant hand are used for different things in real-world environments.

Don't do gesture recognition per se, but create an interface with physical properties that naturally allow for complex behaviour (introduce causality).

Make behaviour less abstract (e.g. collidable windows). Include real objects (pen, eraser,...).
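As a rough illustration of the collidable windows idea, here is a small Python sketch in which a dragged window shoves a resting window out of the way instead of overlapping it. The `Window` class and both functions are hypothetical and not taken from any existing toolkit.

```python
from dataclasses import dataclass


@dataclass
class Window:
    x: float
    y: float
    width: float
    height: float


def overlap(a, b):
    """Overlap along x and y; both are positive only if the windows intersect."""
    overlap_x = min(a.x + a.width, b.x + b.width) - max(a.x, b.x)
    overlap_y = min(a.y + a.height, b.y + b.height) - max(a.y, b.y)
    return overlap_x, overlap_y


def push_apart(moving, resting):
    """Shove `resting` out of `moving` along the axis of least overlap."""
    overlap_x, overlap_y = overlap(moving, resting)
    if overlap_x <= 0 or overlap_y <= 0:
        return False  # no collision, nothing to do
    if overlap_x <= overlap_y:
        # Push horizontally, away from the centre of the moving window.
        moving_centre = moving.x + moving.width / 2
        resting_centre = resting.x + resting.width / 2
        resting.x += overlap_x if resting_centre >= moving_centre else -overlap_x
    else:
        # Push vertically, away from the centre of the moving window.
        moving_centre = moving.y + moving.height / 2
        resting_centre = resting.y + resting.height / 2
        resting.y += overlap_y if resting_centre >= moving_centre else -overlap_y
    return True


# Hypothetical example: the dragged window runs into the left edge of another.
dragged = Window(0, 0, 100, 100)
other = Window(90, 10, 100, 100)
collided = push_apart(dragged, other)
```

After the call, `other` has been pushed to the right until the two windows just touch. The user needs no gesture for this: the behaviour follows from the "physics" of the interface.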

People usually don't collaborate on the same task at the same time, but mostly work on their own and only come together at specific points. This means the current concept of "only one application has focus" must be replaced to allow for such asynchronous cooperation.

User identification would be tremendously helpful. We simply expect that technology for that will become available in time in some form.

Some way of detecting hovering would also be an advantage (basically pointing without clicking). Ulrich's work could help here.

Photos