Implementation of the Multi-Touch Engine and the Control Gestures

Touch Detection

Basic touch detection and handling is done by the chair's own multi-touch solution, developed and implemented by Malte Weiß. An application called the Multi-Touch Agent runs in the background and transforms raw camera data into usable touches. Those touches are distributed to all applications declared as

Transforming a 3D Space Into a 2D Interactable Surface

Selecting an object in 3D while interacting only on a fixed surface introduces many difficulties, first and foremost occlusion. We follow the "what you see is what you get" approach and try to implement the game such that it never offers too many choices to select; in particular, we avoid choices that would be unreachable due to occlusion.

The game grid is a Cartesian space divided into cubes of equal size. Assuming we know the center of such a cube (and we do!), how do we decide whether a touch on the surface belongs to this particular cube?

Here we benefit from our user-centered perspective: since we do all the projection math ourselves and don't leave it to OpenGL, all we need to do is save the current matrix each frame and put the 3D point we want to project through it. So we take the center of a selectable cube, multiply it by the 4x4 transformation matrix, and get its exact position on the 2D screen.
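The projection step can be sketched as follows. This is a minimal illustration, not the project's actual code: the function name `project_point`, the row-major matrix layout, the perspective divide, and the mapping from normalized coordinates to pixels with the origin in the lower left corner are all assumptions modeled on common OpenGL conventions.

```python
def project_point(matrix, point, width, height):
    """Project a 3D point onto the 2D screen.

    matrix: saved 4x4 transformation matrix as a list of four rows
            (assumed to map world coordinates into clip space).
    point:  (x, y, z) center of a selectable cube.
    Returns (sx, sy) in pixels, origin in the lower left corner,
    or None if the point lies behind the viewer.
    """
    x, y, z = point
    v = (x, y, z, 1.0)  # homogeneous coordinates

    # Matrix-vector product: one dot product per matrix row.
    clip = [sum(row[i] * v[i] for i in range(4)) for row in matrix]

    w = clip[3]
    if w <= 0.0:
        return None  # behind the viewer, nothing to select

    # Perspective divide yields normalized coordinates in [-1, 1].
    ndc_x = clip[0] / w
    ndc_y = clip[1] / w

    # Map normalized coordinates to pixel coordinates.
    sx = (ndc_x + 1.0) * 0.5 * width
    sy = (ndc_y + 1.0) * 0.5 * height
    return (sx, sy)
```

Calling this once per selectable cube and per frame gives the 2D point at which an interactive area for that cube can be placed.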

Touch Areas

For each selectable cube we perform the described process. At the projected 2D point we then add a 2D Surface Object to the TableInteractionEngine, which handles touches and the Touch Areas. Each such object represents an area on the screen surrounding a selectable cube. The areas are currently drawn as green squares and move with the perspective, so they are always centered on the corresponding cube. One can imagine these Touch Areas as photos on a table: one can click on them to select them and bring them to the front. Furthermore, we can use the two-touch turn gesture to rotate these squares, which we will abuse to control the orientation of the attached pipe.

Basic Math and Rotation

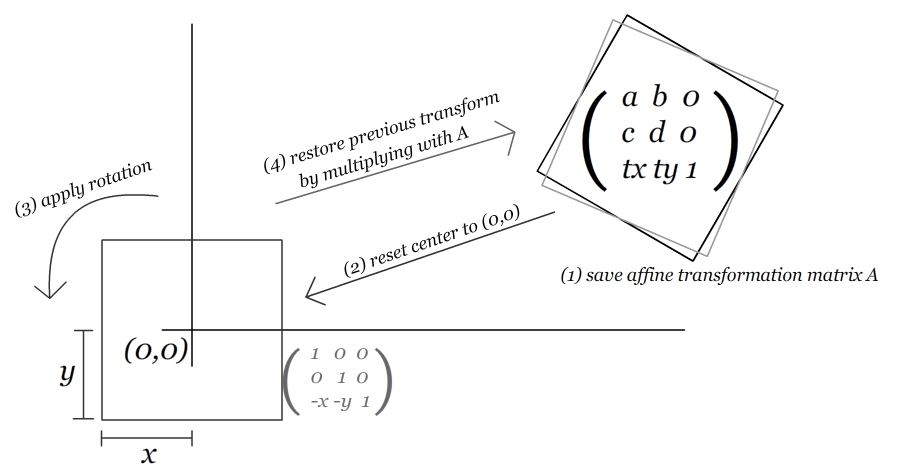

The Touch Areas, or Table Objects, are rectangles of fixed size whose position and rotation are stored in a 2D affine 3x3 transformation matrix. The picture shows how we rotate a Table Object around its center. From the two touches that we received from the MTAgent and assigned to a specific Table Object, we calculate how far the user has turned their hand during the gesture. Before applying this rotation, we reset the Table Object to the origin of the coordinate system (the lower left corner of the screen) and translate it such that the center of the rectangle, not its lower left corner, lies on the origin. After applying the affine rotation, we reapply the original transform to end up with the desired result.
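The rotation described above can be sketched with plain 3x3 matrices. This is an illustrative sketch under stated assumptions, not the actual TableInteractionEngine code: all function names are hypothetical, points are treated as column vectors, the rectangle's local coordinates run from (0, 0) to (width, height), and the translation back from the centered position is added explicitly so that the center stays in place.

```python
import math

def mat_mul(a, b):
    """Multiply two 3x3 matrices given as lists of rows."""
    return [[sum(a[r][k] * b[k][c] for k in range(3)) for c in range(3)]
            for r in range(3)]

def translation(tx, ty):
    return [[1.0, 0.0, tx], [0.0, 1.0, ty], [0.0, 0.0, 1.0]]

def rotation(angle):
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def apply(m, p):
    """Apply an affine 3x3 matrix to a 2D point."""
    x, y = p
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])

def gesture_angle(prev_a, prev_b, cur_a, cur_b):
    """Signed angle (radians) by which the line between two touches turned."""
    before = math.atan2(prev_b[1] - prev_a[1], prev_b[0] - prev_a[0])
    after = math.atan2(cur_b[1] - cur_a[1], cur_b[0] - cur_a[0])
    delta = after - before
    # Wrap the difference so a small turn never reads as almost a full circle.
    return math.atan2(math.sin(delta), math.cos(delta))

def rotate_about_center(transform, size, angle):
    """Return a new transform with the rectangle rotated about its center.

    Working in local coordinates corresponds to "resetting" the object to
    the origin: move the center onto the origin, rotate, move it back,
    and finally reapply the original transform.
    """
    cx, cy = size[0] / 2.0, size[1] / 2.0
    local = mat_mul(translation(cx, cy),
                    mat_mul(rotation(angle), translation(-cx, -cy)))
    return mat_mul(transform, local)
```

For example, a 40 by 20 rectangle placed at (100, 50) and turned by a quarter turn keeps its center at (120, 60), while its lower left corner moves from (100, 50) to (130, 40).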